This guide explores ethical design principles for digital platforms and offers practical guidance for creating products that earn user trust, protect human rights, and contribute positively to society and the environment. User-centered design is the cornerstone of ethical design: it puts the needs, preferences, and experiences of diverse users first, so that products are accessible and inclusive for everyone. That includes accounting for digital literacy, so that users with varying levels of digital skill can interact effectively. Whether you’re a designer, developer, product manager, or business leader, understanding these principles isn’t just a trend; it’s essential for building sustainable, socially responsible digital products that truly empower users.

What Are Ethical Design Principles for Digital Platforms?

Ethical design principles for digital platforms represent fundamental guidelines that prioritize user well-being, privacy, and autonomy throughout the digital product development process. Unlike traditional design approaches that might focus primarily on business metrics or engagement, ethical design refers to a systematic framework that considers the broader impact of design decisions on individuals and society. Moreover, ethical design practices can prevent legal and ethical issues in web design, such as data breaches and discriminatory practices, ensuring platforms operate responsibly.

These principles ensure platforms operate transparently, inclusively, and sustainably while respecting user rights and societal values. They address the reality that digital platforms are not neutral tools but powerful socio-technical systems that can either enhance or diminish human flourishing.

The core principles include user consent, accessibility, transparency, privacy protection, and avoiding manipulative design patterns that trick users into unwanted actions. Understanding and addressing user needs is essential to ensure that digital platforms are accessible and unbiased for people of different abilities, backgrounds, and cognitive styles. User well-being considers physical and mental health in design, focusing on positive user experiences rather than engagement at all costs. Ethical UX design means being upfront and clear in design patterns to foster trust with users. However, ethical design extends beyond avoiding harm—it actively seeks to create positive outcomes for diverse user groups and different cultural contexts.

Implementation requires systematic integration throughout the design process, from initial planning to post-launch monitoring. This means conducting user research with diverse perspectives, evaluating ethical performance on an ongoing basis, and continuing to learn from real-world examples of how design decisions affect user experiences.

Ethical design principles serve as both practical guidance and organizational mission alignment tools. They help teams make informed decisions when facing trade-offs between business objectives and user well-being, ensuring that core principles guide product development rather than being treated as optional add-ons.

The Seven Core Ethical Design Principles

User Autonomy and Informed Consent

User autonomy forms the foundation of ethical digital platform design. This principle demands that platforms provide clear, understandable information about data collection, usage, and sharing practices, enabling users to make informed decisions about their participation. User autonomy in UX design emphasizes providing users with control over their interactions within a digital interface.

Effective implementation means moving beyond legal compliance to genuine transparency. Instead of burying important information in confusing language, ethical platforms use plain language summaries, visual explanations, and progressive disclosure to help users understand exactly what they’re agreeing to. The goal is ensuring users can easily opt out of features or data sharing they don’t want. It is also crucial to avoid manipulative design patterns that deceive users into taking unintended actions, as such practices not only undermine user autonomy but also pose significant ethical and legal risks.

Real-world examples demonstrate this principle in action. Apple’s App Tracking Transparency feature requires apps to explicitly request permission before tracking users across other applications. Users see clear explanations of why apps want to track them and can easily deny permission. Similarly, GDPR-compliant consent banners that allow granular control over different types of data usage represent meaningful implementation of user autonomy.

Granular privacy controls are essential for respecting user autonomy. This means allowing users to customize their experience preferences, choose which notifications they receive, and control how their data is used for recommendations or advertising. The principle extends to account deletion, data portability, and the ability to modify or correct personal information.
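As a rough illustration of granular controls, the sketch below stores consent per purpose and leaves every optional purpose switched off until the user opts in. The purpose names and the `ConsentPreferences` class are hypothetical, chosen only for this example; a real platform would define its own purposes and persistence.

```python
from dataclasses import dataclass, field

# Hypothetical purpose names, used for illustration only.
PURPOSES = ("essential", "analytics", "personalization", "advertising")


@dataclass
class ConsentPreferences:
    """Per-purpose consent for one user; optional purposes default to off."""

    granted: dict = field(
        default_factory=lambda: {p: p == "essential" for p in PURPOSES}
    )

    def update(self, purpose: str, allowed: bool) -> None:
        """Record the user's choice for a single purpose."""
        if purpose not in self.granted:
            raise KeyError(f"unknown purpose: {purpose}")
        self.granted[purpose] = allowed

    def allows(self, purpose: str) -> bool:
        # Anything the user was never asked about counts as not consented.
        return self.granted.get(purpose, False)


prefs = ConsentPreferences()
prefs.update("analytics", True)      # the user opts in to one purpose only
print(prefs.allows("analytics"))     # True
print(prefs.allows("advertising"))   # False
```

Keeping each purpose as a separate switch is what lets a user accept, say, analytics while still declining advertising.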

Accessibility and Universal Design

Accessibility ensures that digital platforms work effectively for users with diverse abilities, creating inclusive experiences that don’t exclude anyone based on disability, age, or technical proficiency. When designing accessible interfaces, it is essential to consider digital literacy, ensuring that users with varying levels of digital skills can interact effectively with the platform. This principle requires following established standards while going beyond minimum compliance to embrace universal design approaches.

Technical accessibility implementation includes following WCAG 2.1 AA guidelines, which address visual, auditory, motor, and cognitive accessibility needs. This means implementing keyboard navigation for users who can’t use a mouse, ensuring screen reader compatibility for visually impaired users, providing captions for video content, and designing with sufficient color contrast for users with visual impairments.
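The color contrast requirement can be checked numerically. The sketch below follows the relative luminance and contrast ratio definitions published with WCAG 2.x, where level AA asks for at least 4.5:1 for normal text and 3:1 for large text. The function names are our own, and the check covers only plain foreground and background colors, not text over images or gradients.

```python
def _linearize(channel: int) -> float:
    """Convert one 8-bit sRGB channel to a linear value (WCAG 2.x formula)."""
    c = channel / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(rgb: tuple) -> float:
    """Relative luminance of an (R, G, B) color with 0-255 channels."""
    r, g, b = (_linearize(v) for v in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(foreground: tuple, background: tuple) -> float:
    """Contrast ratio between two colors, from 1.0 up to 21.0."""
    l1, l2 = relative_luminance(foreground), relative_luminance(background)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: tuple, background: tuple, large_text: bool = False) -> bool:
    """True when the pair meets the WCAG AA contrast threshold."""
    threshold = 3.0 if large_text else 4.5
    return contrast_ratio(foreground, background) >= threshold


# Mid-grey text on a white background, first as body text, then as large text.
print(passes_aa((119, 119, 119), (255, 255, 255)))
print(passes_aa((119, 119, 119), (255, 255, 255), large_text=True))
```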

Cognitive accessibility often receives less attention but is equally important. This involves using clear language, maintaining consistent navigation patterns, providing error prevention and recovery mechanisms, and avoiding overwhelming interfaces that cause confusion or anxiety. Design solutions should anticipate user mistakes and provide helpful guidance rather than punishment.

Microsoft’s inclusive design toolkit exemplifies comprehensive accessibility implementation. Their approach considers temporary, situational, and permanent disabilities, recognizing that accessibility improvements benefit all users. For example, voice controls designed for users with motor disabilities also help people with hands-free needs while driving or cooking.

The BBC iPlayer’s accessibility features demonstrate how platforms can exceed basic requirements. They provide audio descriptions, sign language interpretation, customizable subtitles, and simplified navigation modes. These features weren’t added as afterthoughts but were integrated into the core design process from the beginning.

Transparency and Honest Communication

Transparency requires platforms to clearly explain how they operate, what influences user experiences, and why certain content or recommendations appear. This principle addresses the “black box” problem where users interact with complex systems without understanding how those systems make decisions that affect them.

Algorithmic transparency is particularly crucial as AI systems increasingly determine what content users see, which products are recommended, and how their data is processed. Users deserve to understand the basic logic behind these automated decisions, even when the technical details are complex.

Practical implementation involves providing plain-language explanations of how recommendation algorithms work, what data influences those recommendations, and how users can modify their experience. This includes labeling AI-generated content, clearly marking sponsored posts or advertising, and explaining why certain content is promoted or demoted.

YouTube’s transparency reports and algorithm explanation videos represent meaningful progress in this area. They explain how the recommendation system balances factors like user history, trending content, and content quality to determine what appears in users’ feeds. TikTok has similarly begun providing users with more information about why specific videos appear in their “For You” page.

Privacy policies and terms of service must also embrace transparency principles. This means providing visual summaries, using plain language, and organizing information logically rather than hiding important details in lengthy legal documents. Users should be able to quickly understand their rights, how their data will be used, and what choices they have.

Privacy Protection and Data Minimization

Privacy protection involves collecting only the data necessary for core platform functionality while implementing robust security measures to protect user information. This principle challenges the traditional approach of collecting as much data as possible and instead emphasizes purposeful, minimal data collection. Ethical design practices can also prevent legal issues related to deceptive UX behaviors, helping platforms comply with evolving regulations. More broadly, ethical considerations in UX design extend to minimizing digital carbon footprints and promoting sustainable user behaviors.

Data minimization requires platforms to clearly identify what information they actually need to provide their service and avoid collecting additional data “just in case” it might be useful later. This approach reduces privacy risks, simplifies compliance with data protection regulations, and builds user trust by demonstrating respect for personal information.
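One simple way to enforce data minimization in code is an allow-list applied before anything is stored. The sketch below assumes a hypothetical sign-up flow that needs only an email address and a display name; the field names and the `minimize` helper are illustrative, not taken from any particular platform.

```python
# Hypothetical allow-list: the only fields this imagined sign-up flow needs.
REQUIRED_FIELDS = frozenset({"email", "display_name"})


def minimize(payload: dict, allowed: frozenset = REQUIRED_FIELDS) -> dict:
    """Return a copy of payload that keeps only allow-listed fields."""
    return {key: value for key, value in payload.items() if key in allowed}


submitted = {
    "email": "ada@example.com",
    "display_name": "Ada",
    "birth_date": "1990-01-01",  # not needed for the service, so dropped
    "phone": "+1 555 0100",      # likewise dropped
}
stored = minimize(submitted)
print(sorted(stored))  # ['display_name', 'email']
```

An allow-list fails safe: a new field added to a form is discarded until someone deliberately decides the service needs it.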

Implementation involves conducting regular security audits, implementing end-to-end encryption where appropriate, providing secure data storage with access controls, and establishing clear data retention policies. Platforms should also provide users with data portability options and the right to deletion, making it straightforward to access, correct, or remove personal information.
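A data retention policy becomes easier to audit when it is written down as data rather than scattered through the codebase. The sketch below is a minimal example under assumed values: the categories and retention periods are hypothetical, and a real deletion job would also need to cover backups and legal holds.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention periods per data category.
RETENTION = {
    "access_logs": timedelta(days=30),
    "support_tickets": timedelta(days=365),
}


def is_expired(category: str, created_at: datetime, now: datetime) -> bool:
    """True when a record is older than its category's retention period."""
    return now - created_at > RETENTION[category]


def records_to_delete(records: list, now: datetime) -> list:
    """Select the records whose retention period has already passed."""
    return [r for r in records if is_expired(r["category"], r["created_at"], now)]


now = datetime(2025, 7, 1, tzinfo=timezone.utc)
records = [
    {"id": 1, "category": "access_logs", "created_at": now - timedelta(days=45)},
    {"id": 2, "category": "access_logs", "created_at": now - timedelta(days=10)},
    {"id": 3, "category": "support_tickets", "created_at": now - timedelta(days=45)},
]
print([r["id"] for r in records_to_delete(records, now)])  # [1]
```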

Signal’s messaging platform exemplifies privacy-focused design by collecting minimal user data and implementing robust encryption. Its system is designed so that even Signal cannot access user conversations, demonstrating how privacy protection can be built into the fundamental architecture rather than added as a surface feature. Prioritizing user well-being and interests in this way enhances user trust and loyalty.

DuckDuckGo’s search approach similarly demonstrates privacy-first design. They don’t track users, store personal information, or create advertising profiles based on search history. This shows how business models can align with privacy principles when organizations commit to protecting user data rather than monetizing it.

Fairness and Non-Discrimination

Fairness requires that platform algorithms, content moderation systems, and user interfaces treat all users equitably regardless of their background, demographics, or other characteristics. This principle addresses both intentional discrimination and unintended bias that can emerge from automated systems.

Algorithmic bias often occurs when machine learning systems trained on historical data perpetuate past discrimination or when datasets don’t adequately represent diverse user groups. For example, job recommendation systems might steer women away from technical positions based on historical hiring patterns, or content moderation tools might flag posts from certain communities at higher rates due to biased training data.

Addressing these challenges requires diverse representation in design and development teams, comprehensive testing across different user groups, and ongoing monitoring for discriminatory outcomes. Teams must conduct user research that includes participants from marginalized communities and test how design decisions affect users in different cultural contexts.

Implementation also involves providing equal access to platform features regardless of users’ economic status, geographic location, or device capabilities. This means ensuring platforms function effectively on older devices, work with limited internet connectivity, and don’t exclude users based on their ability to pay for premium features that provide basic functionality.

Regular bias audits help identify when algorithms produce unfair outcomes. These assessments examine whether recommendation systems, search results, content visibility, and other automated decisions treat different user groups equitably. When bias is identified, teams must implement corrective measures rather than simply documenting the problem.
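A bias audit can start with something as simple as comparing outcome rates across groups. The sketch below computes, for each group, how often an item was recommended, then divides the lowest rate by the highest. The 0.8 review threshold is an assumption borrowed from the "four-fifths" rule of thumb used in US employment selection guidance; it is a trigger for closer review, not proof of fairness or unfairness, and the group labels are placeholders.

```python
from collections import defaultdict

REVIEW_THRESHOLD = 0.8  # assumed rule-of-thumb value, not a legal standard


def selection_rates(outcomes) -> dict:
    """outcomes: iterable of (group, was_selected) pairs -> rate per group."""
    selected = defaultdict(int)
    total = defaultdict(int)
    for group, was_selected in outcomes:
        total[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / total[group] for group in total}


def disparity_ratio(rates: dict) -> float:
    """Lowest group rate divided by the highest; 1.0 means identical rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


# Placeholder data: group_a is recommended 3 times in 4, group_b 1 time in 4.
outcomes = (
    [("group_a", True)] * 3 + [("group_a", False)]
    + [("group_b", True)] + [("group_b", False)] * 3
)
rates = selection_rates(outcomes)
ratio = disparity_ratio(rates)
print(round(ratio, 2))            # 0.33
print(ratio < REVIEW_THRESHOLD)   # True, so this result warrants review
```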

Environmental Sustainability and Responsible Innovation

Some platforms are beginning to integrate sustainability considerations into their core features. For example, some e-commerce platforms now display carbon footprint information for products, while others promote locally-sourced items to reduce shipping-related emissions.

An example of an environmentally sustainable digital practice is the search engine Ecosia, which uses its ad revenue to plant trees and actively supports eco-friendly digital ecosystems.

Security and Data Protection

Security encompasses protecting user data from breaches, ensuring platform integrity against malicious actors, and building user confidence through transparent security practices. This principle requires implementing comprehensive security measures while communicating about those protections in ways users can understand.

Robust security implementation includes regular security audits, penetration testing, secure coding practices, and incident response procedures. Platforms must also protect against emerging threats like social engineering attacks, account takeovers, and data harvesting by malicious third parties.

User education plays a crucial role in security. Platforms should provide clear guidance about creating strong passwords, recognizing phishing attempts, and understanding privacy settings. However, security shouldn’t depend entirely on user knowledge—systems should be designed to be secure by default rather than requiring users to become security experts.

Transparency about security practices helps build user trust while maintaining actual security. This involves explaining what protections are in place, how personal data is secured, what happens in case of a security incident, and how users can enhance their own security without revealing information that could be exploited by attackers.

Regular security audits and public security reports demonstrate commitment to protection. Some platforms publish transparency reports detailing security incidents, government requests for user data, and steps taken to improve protection over time.

Implementing Ethical Design in Digital Platforms

Design Process Integration

Successful implementation of ethical design principles requires systematic integration throughout the entire product development lifecycle rather than treating ethics as a final check before launch. This approach ensures ethical considerations shape fundamental design decisions rather than attempting to retrofit ethical features onto completed products. Ethical design practices can prevent legal and ethical issues, such as data breaches and discriminatory practices, by embedding responsible practices from the outset.

The design process should begin with ethical impact assessments during initial product planning phases. These assessments examine potential positive and negative consequences of proposed features, identify vulnerable user populations who might be particularly affected, and establish success metrics that include ethical outcomes alongside business objectives.

Cross-functional ethics committees provide essential oversight and guidance throughout development. These committees should include representatives from design, engineering, legal, user advocacy, and relevant domain expertise. Their role involves reviewing proposed features for ethical implications, providing guidance when teams face ethical dilemmas, and ensuring organizational commitment to ethical principles remains strong even when facing competitive pressure.

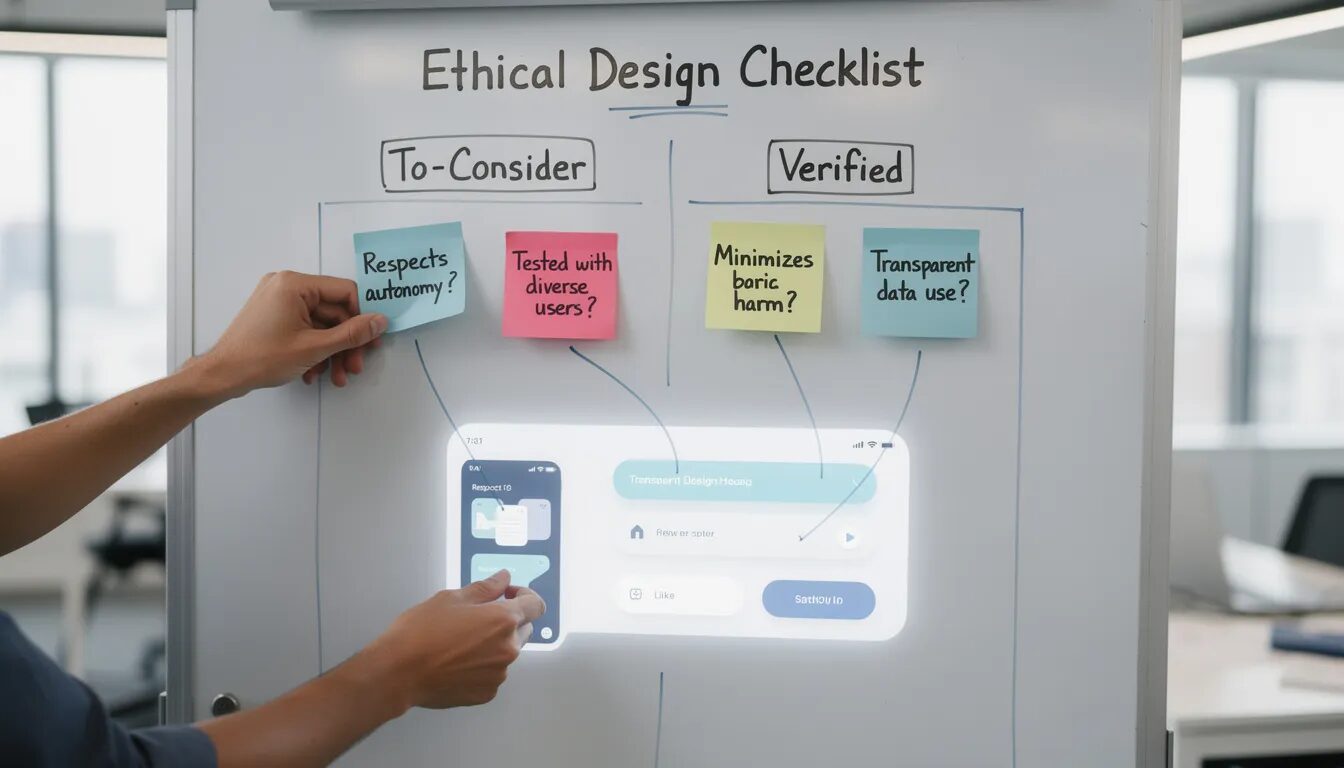

Ethical design checklists help teams systematically evaluate design decisions during development. These checklists might include questions like: “Does this feature respect user autonomy?” “Could this design pattern trick users into unwanted actions?” “Have we tested this feature with diverse user groups?” “What are the long-term consequences of this design choice?”

Documentation of ethical decisions creates organizational learning opportunities and accountability. Teams should record the ethical considerations that influenced major design choices, the trade-offs they considered, and the reasoning behind their final decisions. This documentation helps future teams learn from past experience and provides transparency about how ethical principles influence product development.

Team Training and Culture

Building ethical design capabilities requires comprehensive training that goes beyond one-time workshops to create ongoing learning opportunities. Teams need to understand both the technical aspects of implementing ethical features and the broader social context in which their products operate.

Regular training should cover ethical design principles, accessibility standards, privacy regulations, and cultural sensitivity. However, effective training goes beyond theoretical knowledge to include practical skills like conducting inclusive user research, identifying potential bias in algorithms, and designing transparent user interfaces.

Encouraging ethical decision-making requires clear escalation paths when teams identify potential ethical issues. Team members should know how to raise concerns, who to contact when facing ethical dilemmas, and how the organization will respond to good-faith ethical concerns. This includes protection for employees who raise concerns about potentially harmful features or practices.

Reward systems should recognize teams and individuals who proactively identify and address ethical issues. This might include highlighting ethical innovations in company communications, incorporating ethical considerations into performance evaluations, and celebrating teams that choose ethical approaches even when they require additional time or resources.

Case study documentation of ethical dilemmas and solutions creates valuable organizational learning resources. These case studies should explain the ethical challenge, the options considered, the decision made, and the outcomes achieved. This documentation helps teams learn from both successes and failures in implementing ethical design principles.

Avoiding Dark Patterns and Manipulative Design

Dark patterns represent one of the most direct ethical violations in digital platform design. These deliberately deceptive practices steer users into actions they did not intend, which can expose platforms to significant ethical and legal risks. Eliminating dark patterns requires understanding common manipulative techniques and implementing alternative approaches that respect user agency.

Common dark patterns include hidden subscription renewals where users don’t realize they’re signing up for recurring charges, confusing cancellation processes that make it difficult to unsubscribe from services, forced account creation when users just want to browse or make a single purchase, and misleading language that obscures what users are agreeing to.

The “roach motel” pattern makes it easy to get into a situation but difficult to get out. For example, some platforms make it simple to subscribe to premium features but require multiple steps, phone calls, or waiting periods to cancel. Ethical alternatives involve making cancellation as simple as subscription and providing clear information about cancellation policies upfront.

Bait-and-switch tactics promise one thing but deliver another. This might involve advertising low prices that are only available under impossible conditions or promoting “free” services that actually require payment to use basic features. Ethical approaches involve honest advertising and transparent pricing that clearly explains what users will receive and what they’ll be charged.

“Confirm-shaming” uses language that makes users feel bad about choosing the option the platform doesn’t want them to select. For example, an unsubscribe button might say “No thanks, I don’t want to save money” instead of simply “Unsubscribe.” Ethical alternatives use neutral language that doesn’t attempt to manipulate users’ emotions.

Regulatory requirements increasingly prohibit dark patterns. The California Privacy Rights Act (CPRA) explicitly prohibits dark patterns in privacy interfaces, requiring that consent mechanisms be equally prominent and easy to use regardless of whether users are opting in or out. The EU Digital Services Act similarly requires platforms to avoid design patterns that materially distort users’ ability to make free and informed decisions.

Implementation of ethical alternatives often improves long-term business outcomes even when it reduces short-term metrics. Platforms that respect user autonomy tend to build stronger user trust, reduce customer service complaints, and avoid regulatory penalties. Users who feel respected are more likely to become loyal customers and recommend the platform to others.

Real-World Success Stories

Mastodon’s Decentralized Approach

Mastodon represents a fundamentally different approach to social media platform design, prioritizing user control and community governance over centralized corporate control. Unlike traditional social platforms, Mastodon operates as a federation of independently-operated servers that can communicate with each other while maintaining local community standards and moderation practices.

This decentralized model addresses several ethical challenges common to centralized platforms. Users can choose servers that align with their values, communities can establish their own moderation policies, and no single entity has the power to unilaterally change how the entire network operates. When users disagree with their server’s policies, they can move to a different server while maintaining their social connections.

Mastodon’s approach to algorithmic transparency also differs significantly from commercial platforms. Rather than using engagement-maximizing algorithms, Mastodon provides chronological feeds that show posts in the order they were published. Users can see everything posted by accounts they follow without algorithmic filtering that might hide certain content to increase engagement.

The platform’s funding model avoids advertising-driven incentives that often conflict with user well-being. Instead, Mastodon servers are typically funded through donations from users who appreciate the service, creating alignment between user satisfaction and platform sustainability.

Spotify’s Accessibility Improvements

Spotify has made significant investments in accessibility that demonstrate how major platforms can prioritize inclusive design. Their accessibility improvements include comprehensive voice control features that allow users to navigate the platform, search for music, and control playback without using visual interfaces or precise motor controls.

The platform’s simplified navigation modes help users with cognitive disabilities while also benefiting users who prefer streamlined interfaces. These modes reduce visual complexity and provide clearer pathways to common actions like playing music or creating playlists.

Spotify’s approach to accessibility involves ongoing collaboration with disabled users rather than simply implementing features and assuming they work effectively. They conduct regular usability testing with users who have various disabilities and incorporate feedback into feature improvements and new development.

Their audio content accessibility extends beyond music to include enhanced support for podcasts with transcripts, chapter markers, and adjustable playback speeds. These features help users with different accessibility needs while also providing benefits for language learners and users who prefer to consume content in different ways.

Patagonia’s Digital Platform Sustainability

Patagonia has integrated environmental sustainability into their digital platform strategy, using their online presence to promote sustainable consumption patterns rather than simply maximizing sales. Their “Don’t Buy This Jacket” campaign encouraged customers to consider whether they actually needed new products before purchasing.

Their digital platforms provide extensive information about product durability, repairability, and environmental impact. Rather than hiding information that might discourage purchases, they proactively educate customers about making sustainable choices. This includes guidance on caring for products to extend their lifespan and information about recycling programs for worn-out items.

The company’s carbon-neutral hosting practices demonstrate commitment to reducing the environmental impact of digital operations. They’ve chosen hosting providers that use renewable energy and have implemented efficient coding practices that reduce unnecessary energy consumption.

Patagonia’s platform also facilitates circular economy practices by connecting customers with repair services, used product marketplaces, and recycling programs. These features support the company’s environmental mission while providing additional value to customers who appreciate sustainable options.

Mozilla Firefox’s Privacy-Focused Design

Mozilla Firefox has positioned privacy protection as a core feature rather than an optional add-on, demonstrating how browsers can implement privacy-by-design principles. Their Enhanced Tracking Protection blocks third-party tracking cookies by default, preventing companies from following users across websites without explicit consent.

Firefox’s approach to user education about privacy includes clear explanations of what tracking protection does, why it matters, and how users can customize their privacy settings. Rather than burying privacy controls in complex menus, they’ve made these features prominent and accessible.

The browser provides transparency about blocked trackers, showing users which companies were prevented from tracking them on each website. This visibility helps users understand the extent of online tracking and the value of privacy protection.

Mozilla’s commitment to open-source development allows security researchers and privacy advocates to audit Firefox’s privacy protections and suggest improvements. This transparency builds user trust while enabling continuous improvement of privacy features.

Regulatory Compliance and Legal Requirements

The regulatory landscape for digital platform ethics is evolving rapidly, with new requirements taking effect throughout 2025 and beyond. Understanding these legal requirements is essential for implementing ethical design principles, as compliance failures can result in significant penalties while successful compliance often aligns with broader ethical objectives. Beyond compliance, ethical design promotes long-term environmental and social sustainability, which contributes to a positive brand image.

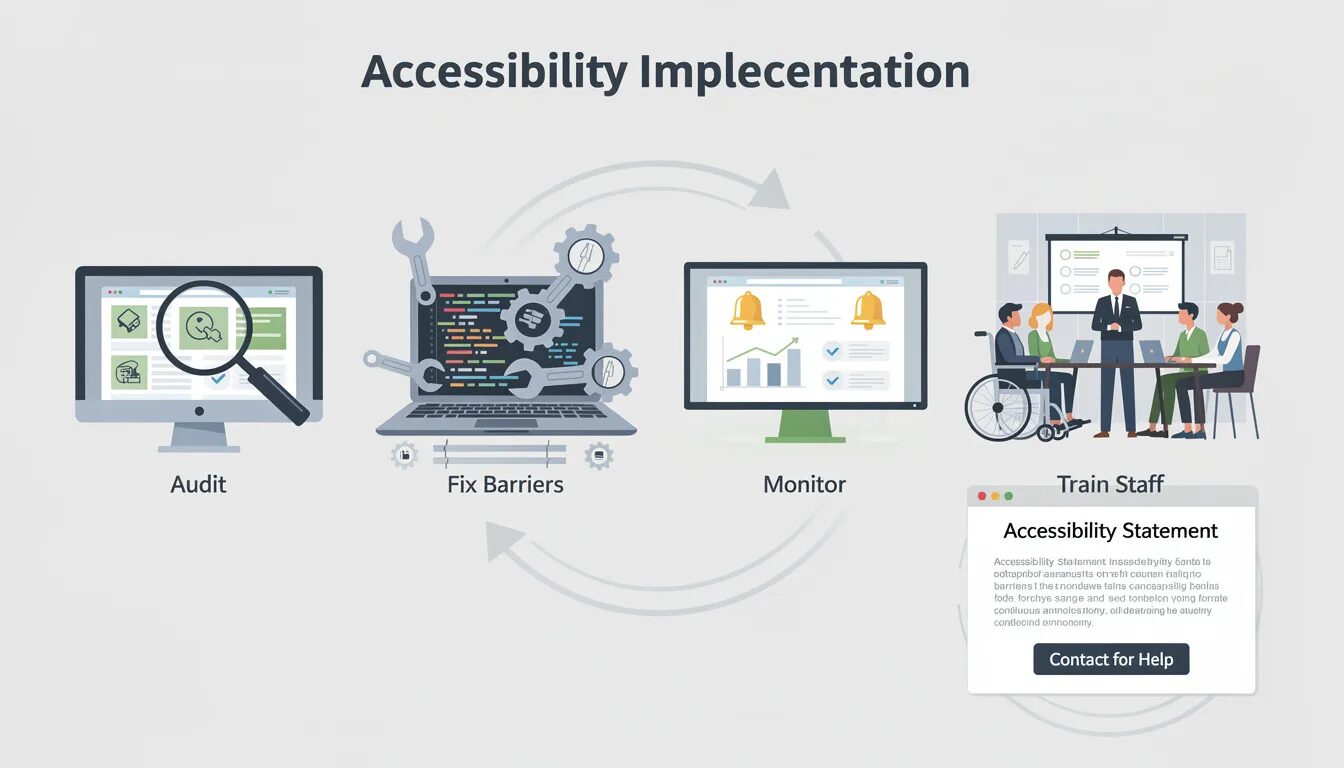

The European Accessibility Act applies from June 28, 2025, requiring digital platforms serving EU users to meet comprehensive accessibility standards. This regulation covers e-commerce platforms, banking services, e-books, and other digital services. Penalties are set by individual member states and therefore vary by country; fines in the range of €5,000 to €20,000 per violation, depending on the severity and scope of accessibility barriers, are among the figures cited.

Implementation requires conducting accessibility audits, remediating identified barriers, establishing ongoing monitoring processes, and training staff on accessibility requirements. Organizations must also provide accessibility statements explaining their compliance efforts and offering contact information for users who encounter accessibility problems.

The Federal Trade Commission has strengthened enforcement of deceptive design practices throughout 2025, particularly targeting dark patterns that trick users into unwanted subscriptions, misleading privacy practices, and interfaces that make it difficult to exercise consumer rights. Recent enforcement actions have resulted in multimillion-dollar penalties for platforms that used manipulative design patterns.

GDPR compliance continues evolving with new guidance on algorithmic decision-making, artificial intelligence systems, and cross-border data transfers. The regulation’s emphasis on privacy by design aligns closely with ethical design principles, requiring platforms to implement data protection measures throughout the design process rather than adding them afterward.

California’s Privacy Rights Act (CPRA) and similar state privacy laws create a patchwork of requirements that often exceed federal standards. These laws typically require prominent privacy controls, prohibition of dark patterns in consent interfaces, and clear disclosure of data sharing practices. As more states adopt similar legislation, platforms increasingly need to implement privacy-protective features nationally rather than creating state-specific variations.

The EU AI Act introduces new requirements for AI systems used in digital platforms, including transparency obligations for recommendation algorithms, bias testing requirements, and user notification when interacting with AI-generated content. These requirements will significantly impact platforms that use artificial intelligence for content recommendation, content moderation, or automated decision-making.

Preparing for emerging AI governance regulations requires implementing explainable AI systems, conducting bias audits, and establishing processes for human oversight of automated decisions. Organizations should also document their AI development and deployment processes to demonstrate compliance with evolving regulatory requirements.

International compliance becomes increasingly complex as different jurisdictions develop varying requirements for platform governance. Organizations operating globally must balance compliance with multiple regulatory frameworks while maintaining consistent user experiences across different markets.

Measuring Ethical Design Success

Measuring the success of ethical design implementation requires developing metrics that go beyond traditional business indicators to capture outcomes related to user well-being, social impact, and long-term sustainability. These measurements help organizations understand whether their ethical design efforts are actually achieving their intended goals and identify areas needing improvement.

User trust metrics provide crucial insights into whether ethical design efforts are building genuine user confidence. Regular surveys can assess user perceptions of platform transparency, privacy protection, and respect for user autonomy. Net Promoter Score assessments specifically focused on trust and ethics can reveal whether users feel comfortable recommending the platform to others based on its ethical practices.

These trust measurements should be segmented across different user groups to ensure that ethical design improvements benefit all users equally. Aggregate trust scores might mask disparities where certain user populations feel less protected or respected by platform design decisions.
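The masking effect is easy to see with a small calculation. In the sketch below, survey responses are (segment, score) pairs on an assumed 1 to 5 scale; the segment names and scores are invented for illustration.

```python
from statistics import mean


def trust_by_segment(responses) -> dict:
    """responses: iterable of (segment, score) pairs -> mean score per segment."""
    grouped = {}
    for segment, score in responses:
        grouped.setdefault(segment, []).append(score)
    return {segment: mean(scores) for segment, scores in grouped.items()}


# Invented survey data: a small segment reports much lower trust.
responses = [("screen_reader_users", 2.0)] * 2 + [("all_other_users", 4.5)] * 8

overall = mean(score for _, score in responses)
print(overall)                      # 4.0
print(trust_by_segment(responses))  # {'screen_reader_users': 2.0, 'all_other_users': 4.5}
```

The overall score of 4.0 looks healthy, yet the smaller segment averages 2.0, which is exactly the kind of disparity an aggregate figure hides.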

Accessibility compliance monitoring involves both automated testing tools and direct user feedback from people with disabilities. Automated tools can identify technical accessibility violations like missing alt text or insufficient color contrast, but human testing reveals whether accessibility features actually enable effective platform use.
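To make the automated side concrete, here is a minimal sketch of a color contrast check based on the WCAG 2.x relative luminance and contrast ratio formulas, using the 4.5:1 threshold that WCAG level AA sets for normal-size text. The function names are our own, and a real audit would rely on an established testing tool rather than this snippet.

```python
def _linearize(channel_8bit):
    """Convert an 8-bit sRGB channel to its linear value (WCAG 2.x formula)."""
    c = channel_8bit / 255.0
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color):
    """Relative luminance of a color given as '#RRGGBB' or 'RRGGBB'."""
    value = hex_color.lstrip("#")
    if len(value) != 6:
        raise ValueError("expected a 6-digit hex color")
    r, g, b = (int(value[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground, background):
    """WCAG contrast ratio, from 1.0 (identical) to about 21.0 (black on white)."""
    l1 = relative_luminance(foreground)
    l2 = relative_luminance(background)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa_normal_text(foreground, background):
    """True if the pair meets the 4.5:1 AA threshold for normal-size text."""
    return contrast_ratio(foreground, background) >= 4.5


print(passes_aa_normal_text("#767676", "#FFFFFF"))  # True
print(passes_aa_normal_text("#777777", "#FFFFFF"))  # False
```

A check like this only confirms that a numeric threshold is met. Whether the text is actually readable in context is something the human testing described above has to establish.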

Regular accessibility audits should include users with various types of disabilities testing core platform functionality. These sessions help identify usability barriers that might not be apparent to non-disabled designers and provide insights into how accessibility improvements affect overall user experience.

Transparency effectiveness can be measured through user comprehension studies that assess whether privacy policies, algorithmic explanations, and consent flows actually help users understand platform operations. High scores on transparency metrics might be meaningless if users don’t actually understand the information being provided.

These studies often reveal significant gaps between what organizations think they’re communicating and what users actually understand. For example, platforms might provide detailed privacy policies that score well on comprehensiveness but fail to help users make informed decisions about their personal information.

Long-term user engagement patterns provide insights into whether ethical design practices support sustainable growth rather than manipulative engagement tactics. Healthy engagement metrics might include users taking deliberate breaks from the platform, using privacy controls effectively, and maintaining consistent usage patterns over time rather than showing addictive usage spikes followed by abandonment.

Algorithmic fairness metrics require ongoing monitoring to ensure that recommendation systems, content moderation, and other automated decisions treat different user groups equitably. This involves analyzing whether algorithm outputs show systematic bias based on user demographics, geographic location, or other characteristics.
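One simple starting point for this kind of analysis is demographic parity: comparing how often each group receives a favorable outcome. The sketch below computes per-group selection rates plus the gap and ratio between the highest and lowest rate. The group labels and decisions are hypothetical, demographic parity is only one of several fairness definitions, and a large gap is a prompt for investigation rather than proof of bias.

```python
from collections import Counter


def selection_rates(decisions):
    """decisions: iterable of (group, was_selected) pairs -> {group: rate}."""
    totals, selected = Counter(), Counter()
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def parity_gap_and_ratio(rates):
    """Return (highest rate - lowest rate, lowest rate / highest rate)."""
    if not rates:
        raise ValueError("no groups to compare")
    highest, lowest = max(rates.values()), min(rates.values())
    ratio = lowest / highest if highest > 0 else 1.0
    return highest - lowest, ratio


# Hypothetical log: was a creator's content surfaced by the recommender?
decisions = (
    [("group_a", True)] * 8 + [("group_a", False)] * 2
    + [("group_b", True)] * 5 + [("group_b", False)] * 5
)

rates = selection_rates(decisions)
gap, ratio = parity_gap_and_ratio(rates)
print(rates)                              # {'group_a': 0.8, 'group_b': 0.5}
print(round(gap, 3), round(ratio, 3))     # 0.3 0.625
```

Running a comparison like this on a schedule, rather than once at launch, matches the ongoing monitoring the paragraph above calls for.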

Environmental impact measurement includes both direct platform energy consumption and indirect effects on user behavior. Organizations should track their carbon footprint from data centers and encourage sustainable user behaviors through platform design choices.
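A first-order estimate of data center emissions can be sketched as IT energy multiplied by the facility's power usage effectiveness (PUE, total facility energy divided by IT energy) and by the carbon intensity of the electricity grid. The input figures below are placeholders, not measurements, and the sketch ignores embodied emissions, network transfer, and user devices.

```python
def estimate_emissions_kg(it_energy_kwh, pue, grid_intensity_g_per_kwh):
    """Estimate operational emissions in kg CO2e.

    it_energy_kwh: energy drawn by servers and other IT equipment
    pue: power usage effectiveness (total facility energy / IT energy, >= 1.0)
    grid_intensity_g_per_kwh: grams of CO2e emitted per kWh of electricity
    """
    if it_energy_kwh < 0 or grid_intensity_g_per_kwh < 0:
        raise ValueError("energy and grid intensity must be non-negative")
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    facility_energy_kwh = it_energy_kwh * pue  # adds cooling and other overhead
    return facility_energy_kwh * grid_intensity_g_per_kwh / 1000.0  # g -> kg


# Placeholder inputs for one month of operation (hypothetical values).
monthly_kg = estimate_emissions_kg(
    it_energy_kwh=1000, pue=1.5, grid_intensity_g_per_kwh=400
)
print(monthly_kg)  # 600.0
```

Tracking this figure over time, per region or per feature, gives teams a baseline against which design changes can be compared.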

Future Trends in Ethical Digital Platform Design

The future of ethical digital platform design will be shaped by emerging technologies, evolving regulatory frameworks, and growing user awareness of digital rights and platform impacts. Understanding these trends helps organizations prepare for changing expectations and requirements while identifying opportunities to lead in ethical innovation.

Environmental sustainability will become increasingly central to platform design as climate change concerns intensify and regulatory frameworks begin requiring environmental impact disclosure. Future platforms will likely need to provide carbon footprint tracking for user activities, implement more aggressive energy efficiency measures, and design features that actively promote sustainable behaviors.

This trend extends beyond operational efficiency to fundamental questions about digital consumption patterns. Platforms may need to balance growth objectives with environmental responsibility, potentially implementing features that encourage mindful usage rather than maximizing engagement time.

Algorithmic transparency mandates will likely expand significantly, requiring platforms to provide more detailed explanations of how automated decisions are made. This might include “algorithmic nutrition labels” that explain recommendation systems in standardized formats, similar to how food products display nutritional information.

Users increasingly expect to understand and control the algorithms that shape their information diet. Future platforms may need to provide multiple algorithmic options, allowing users to choose recommendation systems that align with their values and preferences rather than accepting a one-size-fits-all approach.

Expanded accessibility requirements will likely cover cognitive disabilities and emerging assistive technologies more comprehensively. As understanding of neurodiversity grows, platforms will need to design for a broader range of cognitive differences and provide more customization options for information processing and interface interaction.

Virtual and augmented reality platforms will face entirely new accessibility challenges as these technologies become more mainstream. Designing ethical VR/AR experiences will require considering physical safety, psychological well-being, and accessibility for users with various sensory and motor abilities.

Global harmonization of privacy standards may eventually create more consistent requirements across different jurisdictions, simplifying compliance while potentially raising baseline privacy protections worldwide. However, this harmonization process will likely involve complex negotiations between different regulatory approaches and cultural values.

Cross-border data governance frameworks will need to address the reality that digital platforms operate globally while different countries maintain varying data protection requirements. Future solutions may involve technical approaches that provide different privacy protections based on user location and preferences.

User ownership and control of digital infrastructure may become more prevalent as dissatisfaction with centralized platform control grows. This could involve cooperative ownership models, blockchain-based platforms that distribute control among users, or public digital infrastructure initiatives that prioritize user rights over profit maximization.

These alternative governance models represent fundamental shifts in how digital platforms are owned and controlled, moving from corporate-controlled services toward more democratic or community-governed approaches. The success of these alternatives will significantly influence future platform development and regulation.

Artificial intelligence governance will likely become more sophisticated as AI systems become more powerful and pervasive in platform operations. This includes requirements for bias testing, human oversight of automated decisions, and user rights to explanation when AI systems affect them significantly.

The challenge of governing AI systems while preserving their beneficial capabilities will require careful balance between innovation and protection. Future regulations may provide frameworks for responsible AI development while avoiding stifling beneficial applications.

Conclusion

Implementing ethical design principles for digital platforms isn’t just the right thing to do; it has become essential for sustainable business success in an increasingly regulated and user-aware digital landscape. As we’ve explored throughout this guide, ethical design encompasses far more than avoiding obvious harm; it requires a proactive commitment to user well-being, environmental sustainability, social justice, and democratic values.

The seven core principles we’ve examined (user autonomy, accessibility, transparency, privacy protection, fairness, environmental responsibility, and security) provide a comprehensive framework for creating digital products that truly serve their users and society. However, implementing these principles successfully requires systematic integration throughout the design process, organizational commitment to ethical values, and continuous learning from real-world examples and user feedback.

The regulatory landscape is rapidly evolving to require many ethical design practices that were once considered optional best practices. Organizations that begin implementing ethical design principles now will be better positioned to comply with emerging requirements while building user trust and competitive advantage.

Most importantly, ethical design is an ongoing process rather than a destination. As digital technologies continue evolving and our understanding of their social impacts deepens, ethical design practices must adapt and improve. This requires maintaining diverse perspectives on design teams, conducting ongoing evaluation of ethical performance, and remaining committed to user well-being even when it conflicts with short-term business metrics.

The future of digital platforms depends on our collective commitment to designing technology that enhances rather than diminishes human flourishing. By implementing ethical design principles systematically and continuously improving our approaches based on evidence and user feedback, we can create digital environments that truly empower users and contribute positively to society.

Starting your ethical design journey begins with an honest assessment of current practices, identification of areas for improvement, and commitment to ongoing learning and adaptation. The principles and strategies outlined in this guide provide a foundation, but ethical design success ultimately depends on organizational dedication to putting ethical considerations at the center of product development decisions.

Overcoming Ethical Challenges

Overcoming ethical challenges in digital platform design requires a proactive and holistic approach that embeds ethical considerations into every phase of the design process. Ethical design principles—such as transparency, accountability, and respect for user autonomy—should not be afterthoughts, but core components guiding all design decisions. This means that from the earliest stages of ideation through to launch and beyond, teams must be vigilant about the ethical implications of their choices.

A critical first step is conducting thorough user research to uncover potential ethical challenges that may not be immediately obvious. Engaging with a broad range of users, including those from diverse backgrounds and with different abilities, helps identify where design solutions might inadvertently trick users or create barriers to well-being. For example, usability testing can reveal the presence of dark patterns, which are design tactics that manipulate or confuse users into taking actions they did not intend. By identifying these issues early, teams can refine their design practices to eliminate manipulative elements and ensure that user autonomy is respected.

Integrating diverse perspectives throughout the design process is essential for anticipating and addressing complex ethical challenges. This includes involving stakeholders from different cultural contexts, disciplines, and lived experiences to ensure that design solutions are inclusive and socially responsible. Regularly revisiting and updating design principles in light of new findings and societal shifts helps teams stay aligned with evolving ethical standards.

Ultimately, overcoming ethical challenges is an ongoing process that requires commitment to continuous learning and adaptation. By prioritizing ethical design practices, conducting robust user research, and rigorously testing for unintended consequences, organizations can create products that not only avoid harm but actively promote positive outcomes for users and society at large.

Effective Design Practices

Effective design practices are the foundation of creating digital products that are both user-friendly and ethically sound. Applying ethical design principles—such as accessibility, privacy protection, and a focus on user well-being—ensures that the design process consistently prioritizes the needs and rights of users. This approach goes beyond compliance, aiming to foster user trust and contribute to a more ethical digital world.

A key element of effective design is conducting user research with diverse user groups. By engaging with people from various backgrounds, abilities, and cultural contexts, designers can better understand complex ethical challenges and develop solutions that are inclusive and socially responsible. This diversity of input helps anticipate unintended consequences and ensures that products serve the broadest possible audience.

Responsible innovation is another cornerstone of ethical design. Designers should consider the ethical implications of data collection and data practices, ensuring that only the data necessary for the product’s functionality is gathered and that users are informed about how their data will be used. Transparent data practices not only protect user privacy but also build lasting user trust.
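Data minimization can be enforced in code as well as in policy. The sketch below keeps only the fields allowlisted for a stated purpose and reports what was discarded, so that over-collection is visible during review. The purposes, field names, and the `minimize` helper are all hypothetical; a real mapping would come from the organization's own data inventory.

```python
# Hypothetical mapping: processing purpose -> fields that purpose actually needs.
ALLOWED_FIELDS = {
    "account_creation": {"email", "display_name"},
    "order_shipping": {"email", "display_name", "postal_address"},
}


def minimize(payload, purpose):
    """Return (kept, dropped): allowlisted fields and the names of discarded ones."""
    try:
        allowed = ALLOWED_FIELDS[purpose]
    except KeyError:
        raise ValueError(f"unknown purpose: {purpose!r}") from None
    kept = {key: value for key, value in payload.items() if key in allowed}
    dropped = sorted(set(payload) - allowed)
    return kept, dropped


# Hypothetical sign-up form submission that asks for more than it needs.
submitted = {
    "email": "user@example.com",
    "display_name": "Sam",
    "birth_date": "1990-01-01",
    "phone": "555-0100",
}

kept, dropped = minimize(submitted, "account_creation")
print(kept)     # {'email': 'user@example.com', 'display_name': 'Sam'}
print(dropped)  # ['birth_date', 'phone']
```

Rejecting unknown purposes outright, rather than defaulting to "collect everything", keeps the burden on the team to justify each new field.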

Environmental sustainability should also be integrated into design practices. This means considering the environmental impact of digital products, from energy consumption to the lifecycle of devices and services. By prioritizing sustainable choices, designers contribute to the well-being of both users and the planet.

Continuous learning and ongoing evaluation of ethical performance are essential in a rapidly evolving digital landscape. As AI systems and other new technologies introduce novel ethical challenges, teams must remain vigilant, updating their practices to address emerging risks and opportunities. Regularly assessing the impact of design decisions on human rights, societal values, and human well-being ensures that ethical design remains a living, adaptive process.

In summary, ethical design refers to the practice of creating digital products that respect human rights, promote well-being, and align with societal values. By embedding these principles into every stage of the design process, organizations can ensure that ethical design is not a passing trend in their products but a lasting force for good in today’s digital age.